Published on the 05/07/2016 | Written by Beverley Head

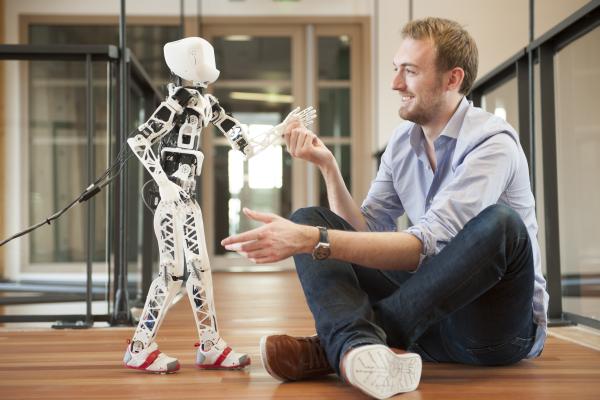

As robots emerge from laboratories and science fiction to take on roles in factories, transport systems, hospitals and households, humans are having to come to terms with them…

Dr Kate Darling, a leading specialist in robot ethics at the Massachusetts Institute of Technology, visited Australia for Agile Australia.

She studies the psychology of how humans interact with robots, and while that may seem esoteric, it’s important for understanding how robots are used – or abused – by human workforces. For example, early studies have shown that soldiers working with bomb disposal robots have been unwilling to send them back into the field in case they are blown up.

“Military personnel become very attached to tools they use – like bomb disposal units – the fact that robots are saving their lives.

“They give them funerals with gun salutes. But it’s also a bit worrying because the robots are not intended for this. If you have people hesitating for even a second to use the robot the way it was intended then that can be dangerous,” said Darling, who was speaking at an event organised by Slattery IT.

Gartner has already issued warnings to CIOs and technology teams not to anthropomorphise artificial intelligence or cognitive computing platforms, recommending that terms such as “smart machines” be encouraged instead – in part to manage enterprise expectations.

But according to Darling: “This is biologically hardwired in us. The design of robots is increasingly moving toward pushing those buttons in people and engaging with people, we will increasingly view robots as something that’s alive – but it’s not.”

She said her research was intended to analyse how people anthropomorphise robots, and the impact that has on how the robot is used. Naming a robot seems to have a significant impact, and can help with human acceptance of a robot in a workplace. However, naming it also means people may protect the robot rather than use it for what it was designed to do.

“Robotics is really important right now. Robots are moving from behind the scenes, in factories, in cages, to new areas of the world, to transportation systems and hospitals and households – and starting to interact with people in a way we haven’t seen before.”

And while this opened up new business opportunities – deploying robots in prisons, in aged care, in child care – Darling said that it was important people grappled with the ethics of doing that, and also the impact it might have on humans.

“Could it affect or impede development in children? The same way we don’t let small children play violent video games, but robots bring it to a new level because of the physicality of our interactions.”

She said that she was excited about the potential applications of robots in health and education, for example to bridge communication between autistic children and adults, or to teach language.

“But there are a lot of uses that concern me and other people working in robot ethics. Corporations are the ones that will be controlling the technology and their interests may not always line up with those of consumers.

“So it might be cool to have a robot vacuum cleaner – but it might be collecting a lot of data about your home as they get smarter. There are a lot of privacy concerns.

“And are we using robots not as a supplement to people but replacing them – for example in elder care or childcare situations?

“There is the question of emotional manipulation. Corporations or governments which control the technology – those would be my main concerns.”

No such problems for the Dallas Police.