Published on the 30/10/2018 | Written by Pat Pilcher

Humans decide in order to teach the machines…

Here’s a tough question: an AI-controlled autonomous car is about to crash. It can drive into a child, or it can plough into a crowd of senior citizens. It cannot slow down, nor can it avoid crashing. So who should live, and who should die?

This grisly scenario, along with other equally tough questions, was put to millions of people in over 200 countries and territories as part of a study conducted by MIT, Harvard University, the University of British Columbia and the Université Toulouse Capitole.

The study, called the Moral Machine, produced somewhat alarming results. In addition to the no-win scenario outlined above, respondents were asked 13 similar questions that forced them to choose between humans and animals, jaywalkers and law-abiding citizens, males and females, and obese and underweight people. So just what choices did we humans say machines should make in such split-second situations?


While no good outcome was possible in many of the questions, the results were largely predictable. Participants tended to favour saving children over the elderly, groups of people over individuals, females over males, and skinny people over obese ones. More depressingly, they also favoured the rich over the poor (although engineering in the ability to check bank balances before deciding whom to crash into might be a big ask, even for Tesla).

Interestingly, answers also varied along cultural lines. Respondents from collectivistic cultures such as China (where respect for the elderly is a big thing) were less inclined to spare the young over the old. Respondents in Westernised, individualistic cultures such as Australia, the US, the UK and New Zealand favoured scenarios that saved the greatest number of people. Respondents in less developed countries were more inclined to spare jaywalkers, and, in bad news for blokes, nearly all respondents, except those in Latin America, preferred saving females over males.

The study was designed to help researchers understand what people will expect of the AI systems that power driverless cars. “There are so many moral decisions we make during the day and don’t realise,” says Moral Machine project lead Edmond Awad. “In driverless cars, these decisions will have to be implemented ahead of time, and the goal was to open these discussions to the public.”

The advent of AI-powered self-driving vehicles and a push for greater use of advanced robotics in warfare mean that, for the first time, humanity is in the position of allowing machines to decide who lives and who dies with no human input or supervision. The Moral Machine team hopes the study’s results will help open up ‘global conversations to express our preferences to the companies that will design moral algorithms, and to the policymakers that will regulate them’, says Awad. While the scenarios in the study are hypothetical, and self-driving cars cannot yet tell rich from poor, or even jaywalkers from law-abiding citizens, there is still an odd mix of fascination and unease around AI-controlled self-driving cars.

The ethics of AI need to be regulated carefully and consistently. A poorly coordinated regulatory framework for AI ethics could produce discrepancies between jurisdictions, leading to complex scenarios in the EU and US where drivers are forced to adjust their vehicle’s AI ethics every time they cross a border into another country or state.

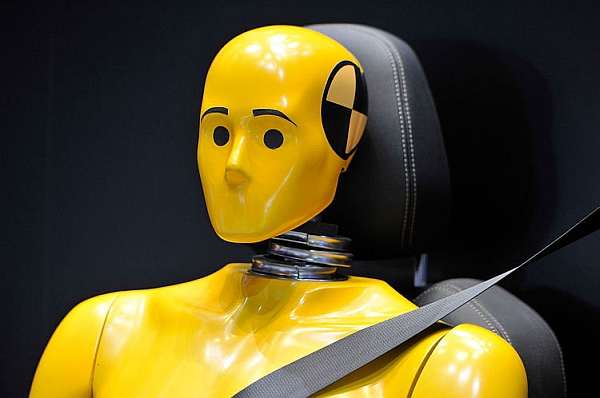

Either way, self-driving cars are coming. Many of the more prominent auto manufacturers are working on AI-controlled vehicles, and in a recent white paper, the Ministry of Transport (MoT) said it believes self-driving cars will deliver big improvements in road death statistics:

“We are on the cusp of a revolution that will bring far greater road safety benefits than seatbelts and airbags combined. Autonomous (or driverless) vehicles will take the driver – the single most significant risk factor for the last century – out of the safety equation.”

MoT sentiments aside, anxiety and distrust around AI-controlled vehicles still exist, and haven’t been helped by two recent fatal accidents involving self-driving technology. In the US, Walter Huang died when his Tesla Model X crashed into a motorway barrier and burst into flames. Investigators say Autopilot was engaged at the time of the accident. Only days before Huang’s death, a pedestrian, Elaine Herzberg, was killed by an autonomous Uber test vehicle in Arizona.

Studies such as the Moral Machine survey are vital because, as more self-driving cars take to the roads, accidents involving them are set to grow. As AI becomes more commonplace, the debate over what should guide the moral and ethical judgements made by AI-controlled machines will be sorely needed.