Published on the 26/09/2024 | Written by Heather Wright

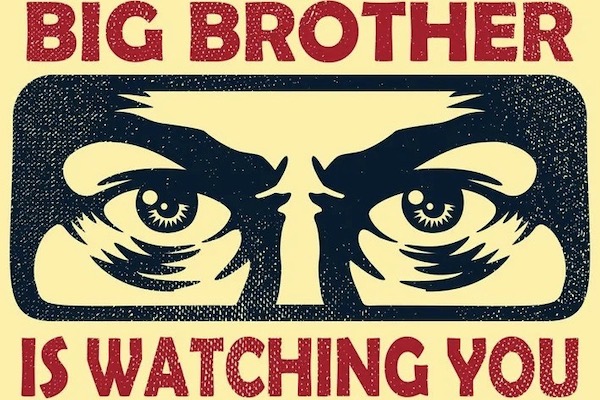

People tracking across the internet…

America’s Federal Trade Commission has lambasted social media and streaming services as ‘vast surveillance’ operations that collect and share more personal information than most users realise, with lax privacy controls and safeguards.

The comments come after the FTC, which enforces antitrust and consumer protection laws, examined how nine companies, including Amazon, Meta, YouTube, X and TikTok owner ByteDance, collect and monetise personal information, how algorithms power their products, and how those products affect children and teens.

Snap, Reddit, WhatsApp and Discord were also among the companies ordered to provide information for the project in 2020, following concerns that the platforms were ‘dangerously opaque’ and ‘shrouded in secrecy’ in how they collected data and used algorithms. At the time, FTC commissioners said it was ‘alarming’ how little was known about companies ‘that know so much about us’.

The report lays out just how much is collected: veritable ‘troves’ of data from and about both users and non-users, gathered in ways ‘consumers might not expect’. It says self-regulation has clearly been a failure and ‘we must not continue to let the foxes guard the henhouse’.

The information collected, and retained indefinitely, covered activity both on and off the platforms, including personal and demographic information, interests, behaviours and activity elsewhere on the internet. It included information users entered themselves, information gathered passively or inferred, and information some companies bought about users from data brokers and others, such as data on household income, location and interests.

After collecting vast amounts of personal information, the platforms put few guardrails on how that data was disclosed, and most shared it with an assortment of third parties, says FTC chair Lina Khan, who has pursued the platforms for a number of years.

“Troublingly, no platform could provide a comprehensive list of the third parties to which it had disclosed user data, and several reported disclosing the data to third parties outside of the US, including foreign adversaries,” Khan says.

Many of the platforms couldn’t tell the FTC how much data they were actually collecting.

The report also noted the widespread application of algorithms, data analytics and AI to the personal information of users and non-users, saying these technologies ‘powered’ the platforms, driving everything from content recommendation, search and advertising to inferring personal details about users.

Consumers, however, lacked any meaningful control over how their personal information was used.

“Overall there was a lack of access, choice, control, transparency, explainability and interpretability relating to the companies’ use of automated systems. There were also differing, inconsistent and inadequate approaches relating to monitoring and testing the use of automated systems.”

The report notes harms including algorithms that may prioritise harmful content, such as dangerous online challenges.

A failure to protect children and teens was also a focus of the report, with many companies simply asserting they had no child users on their platforms.

The report does not disclose company-by-company findings.

Many of the companies relied on selling advertising services to other businesses based largely on their users’ personal information, monetising that data to the tune of billions of dollars a year.

The report notes an ‘inherent tension’ between business models that rely on collecting user data and the protection of user privacy.

The tech giants and platforms have been under intense scrutiny for years over their tracking practices and the privacy implications. In the US, there have been a number of proposals for stricter privacy and online safety protections for children, though most have come to little.

In Australia, privacy laws are being reformed, though the proposed changes have faced criticism for not going far enough and leaving Australians at the mercy of tracking, targeting and profiling by data brokers and others.

There are also plans for a minimum age limit for children using social media in Australia, with an age verification trial planned.

Unsurprisingly, the platforms themselves were quick to hit back at the report.

X said the study was based on its 2020 practices, which it has since improved.

Discord said the report was an important step but lumped ‘very different models into one bucket’, claiming its own model was ‘very different’, with strong privacy controls and no feeds for endless scrolling. At the time of the study, Discord did not have a formal online advertising service.

Google, meanwhile, said it had the strictest privacy policies in the industry and ‘never’ sold people’s personal information or used ‘sensitive’ data to serve ads.

The report makes a number of recommendations for policymakers and companies, including ‘comprehensive federal privacy legislation to limit surveillance, address baseline protections and grant consumers data rights’.

It also calls for companies to limit data collection, implement concrete and enforceable data minimisation and retention policies, and limit data sharing. Deleting consumer data when it is no longer needed, adopting ‘consumer friendly’ privacy policies and not collecting sensitive information through privacy-invasive ad tracking technologies were also among the recommendations.