Published on the 01/09/2021 | Written by Heather Wright

OpenAI’s tool to multiply programmers…

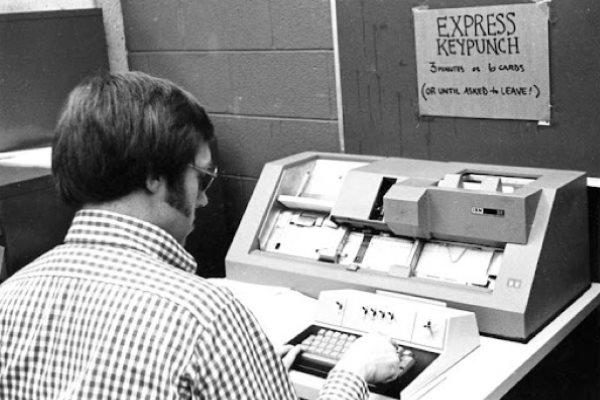

Mastering the language of machines, from punch cards to Cobol, Java and Python, has long been a crucial requirement for programmers. Now, machines are becoming conversant in the language of programmers.

AI research company OpenAI, whose backers include Microsoft and Peter Thiel, recently launched a private beta test of its OpenAI Codex system, which translates natural language into code.

The offering can interpret simple commands in natural language and turn them into computer code, making it possible to build a natural-language interface to existing applications, OpenAI says.

It is designed to speed up programming work and could help non-programmers start coding.

In a live demo, OpenAI co-founders Greg Brockman and Ilya Sutskever showed how the software can build simple websites and rudimentary games from natural-language instructions.

According to OpenAI, Codex is ‘proficient’ in more than a dozen programming languages, though it’s strongest in Python.

“Once a programmer knows what to build, the act of writing code can be thought of as (1) breaking a problem down into simpler problems, and (2) mapping those simple problems to existing code (libraries, APIs, or functions) that already exist,” OpenAI says. “The latter activity is probably the least fun part of programming (and the highest barrier to entry), and it’s where OpenAI Codex excels most.”
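To make the two steps in that quote concrete, here is a small, hypothetical illustration in Python, the language OpenAI says Codex handles best. The task ("find the most common words in a text") and the function name `top_words` are assumptions chosen for illustration, not anything from OpenAI. The task is first broken into simpler problems, and each simpler problem is then mapped onto code that already exists in Python's standard library.

```python
import re
from collections import Counter


def top_words(text: str, n: int) -> list[tuple[str, int]]:
    """Return the n most common words in text (hypothetical example task)."""
    # Step (1): break the problem into simpler problems:
    #   normalise case -> split into words -> count words -> pick the top n.
    # Step (2): map each simpler problem onto code that already exists.
    lowered = text.lower()                    # str.lower normalises case
    words = re.findall(r"[a-z']+", lowered)   # re.findall extracts word tokens
    counts = Counter(words)                   # collections.Counter counts them
    return counts.most_common(n)              # Counter.most_common picks the top n


print(top_words("The cat and the hat and the bat", 2))
# prints [('the', 3), ('and', 2)]
```

Only step (1) required thinking about the problem itself; step (2) is the lookup work of knowing which existing functions to call, which is the part OpenAI says Codex excels at.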

OpenAI was founded in 2015, and Codex is a descendant of the company’s GPT-3, a general-purpose natural-language machine learning model. Released last year, GPT-3 produces human-like text by taking a prompt and attempting to complete it. It has been used to write articles about the tool itself, along with emails, poetry and even recipes, and to generate Python code for deep learning.

Brockman says that while GPT-3 wasn’t built for programming, the applications that most caught people’s attention were the programming ones. The model was then fine-tuned specifically for coding, and Codex was born.

Like GPT-3, Codex was trained on an expansive dataset, but in Codex’s case that data included public source code, which taught the system how to understand computer code.

“OpenAI Codex has much of the natural language understanding of GPT-3 but it produces working code – meaning you can issue commands in English to any piece of software with an API,” OpenAI says.

There are still kinks, and the software is far from infallible: Codex currently completes only around 37 percent of requests successfully.

OpenAI admits that Codex will only be offered for free ‘during the initial period’. Its deal with Microsoft reportedly means the software giant will have exclusive access to parts of Codex.

Codex, or at least an earlier version of it, is the model powering Copilot on the Microsoft-owned development platform GitHub. As its name suggests, Copilot aims to act as a (junior) AI pair programmer, working alongside a human programmer and offering suggestions ranging from single lines of code to entire functions.

Copilot sparked controversy, with some programmers angered by reports that it blindly copied blocks of the code used to train the algorithm, and by the likelihood that OpenAI would ultimately profit from open-source developers’ work.