Published on the 02/02/2022 | Written by Heather Wright

Lost revenue, lost customers, lost employees…

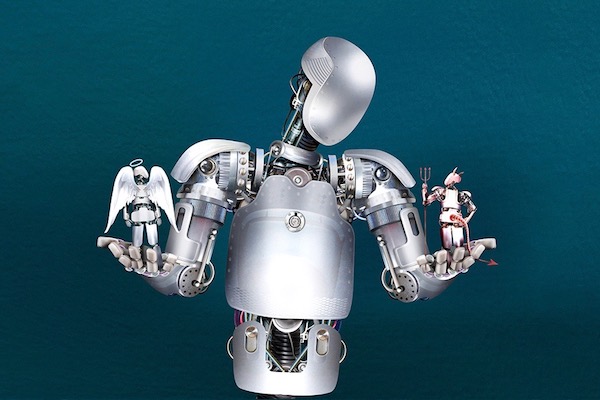

Done right, AI can help businesses transform, optimising processes and services, increasing accuracy and efficiency and potentially accelerating business growth. Done wrong, however, it also has consequences – as an increasing number of companies are discovering.

In a DataRobot survey, conducted in collaboration with the World Economic Forum, 36 percent of respondents said their organisations had suffered ‘challenges’ or direct business impact due to AI bias in algorithms.

And the damage was significant: among those organisations, 62 percent lost revenue, 61 percent lost customers and 43 percent lost employees.


Respondents reported that their algorithms have inadvertently contributed to gender discrimination (32 percent), racial discrimination (29 percent), sexual orientation discrimination (19 percent), and religious discrimination (18 percent).

DataRobot’s State of AI Bias report shows these biases persist even though companies have put guardrails, including AI bias or algorithm tests, in place.

The report echoes concerns raised by New Zealand businesses in Qrious’ inaugural State of AI in New Zealand report, published late last year. That report found that ethics, bias, transparency and accountability were concerns for nearly half of all respondents, with 47 percent saying businesses don’t have a handle on the legal and ethical implications and 47 percent saying businesses are not transparent enough about AI use.

The DataRobot report highlights that while AI may be gaining credence, bias remains a very real problem. Indeed, there’s growing nervousness around AI bias, with 54 percent of technology leaders surveyed saying they’re very or extremely concerned about bias, up from 42 percent in 2019. Their biggest concerns? Loss of customer trust – something only six percent say they’ve had to deal with so far – and compromised brand reputation, along with the potential for increased regulatory scrutiny.

Brandon Purcell, Forrester VP and principal analyst, agrees that AI-based discrimination, even if unintentional, can have dire regulatory, reputational and revenue impacts.

Numerous AI bias failures have hit the news in recent years. They range from a health care risk-prediction algorithm that heavily favoured white patients over black patients when it came to high-risk care management, to the Compas algorithm, which US courts use to predict the likelihood of criminal reoffending and which produced twice as many false positives for recidivism for black offenders as for white offenders.

Then there was Amazon’s sexist hiring algorithm – which replicated existing hiring practices and marked women down – and Australia’s Giggle social media platform and its facial recognition AI fail (what could possibly go wrong with using AI to screen gender?…)

For organisations of all shapes and sizes, AI bias is proving a minefield.

And while organisations might embrace fairness in AI in principle, putting the processes in place to practise it consistently is challenging, Purcell says. The right approach depends on the use case and the societal context.

The number one challenge in eliminating bias? Understanding the reasons behind a specific AI decision.

Forrester has forecast that the market for ‘responsible’ AI solutions will double in 2022.

Srividya Sridharan, Forrester VP and senior research director, says some regulated industries have already started adopting responsible AI solutions that help companies turn AI principles such as fairness and transparency into consistent practices.

“In 2022, we expect the demand for these solutions to extend past these industries to other verticals using AI for critical business operations,” Sridharan says.

She says with this uptick in adoption, existing machine-learning vendors will acquire specialised responsible AI vendors for bias detection, interpretability and model lineage capabilities.

Certainly, those surveyed in the DataRobot report are keen to see technology doing the heavy lifting, with two-thirds saying AI bias guardrails that automatically detect bias in datasets are an important feature in AI platforms.

Companies also reported taking action to avoid AI bias by checking data quality (69 percent), training employees on AI bias and hiring an AI bias or ethics expert (51 percent each), measuring AI decision-making factors (50 percent), monitoring when the data changes over time (47 percent), deploying algorithms that detect and mitigate hidden bias (45 percent) and introducing explainable AI tools (35 percent).

DataRobot’s report also looked at attitudes to regulation, finding, perhaps surprisingly, that 81 percent of respondents want government regulation of AI.

Deloitte’s State of AI in the Enterprise report late last year noted the importance of creating new processes for AI and ML development. Organisations that strongly agreed that MLOps processes were followed were three times more likely to achieve their goals and four times more likely to report feeling ‘extremely prepared’ for risks associated with AI. They were also three times more confident that they could deploy AI initiatives in an ‘ethical, trustworthy way’.

“The complexity (and controversy) around AI bias has made one thing clear: Humans and AI are deeply intertwined,” DataRobot says. “Human involvement in AI systems remains – and will continue to remain – essential.

“By leveraging AI experts who know how to optimise both sides of the human-AI coin, organisations can ensure that AI is free from human flaws and humans are free from AI biases.”